Research Overview

The emerging fields of autonomous systems and biomechatronics require an interdisciplinary effort to meet the increasing demand for intelligent robots and devices that assist humans in critical applications, including elderly/patient care, search and rescue, civilian and military transportation, and surveillance/exploration. As these systems enter such real-world scenarios, their performance in accomplishing complex tasks or solving problems depends heavily on their perception and understanding of potentially unknown or cluttered environments. Hence, major advances are needed in robot/device mechanical design, sensor techniques, sensor information interpretation, and control architectures to allow these systems to explore and interact in varying 3D environments that may contain humans.

Research Activities

- Assistive Robotics and Assistive Devices

- Social and Personal Robots

- Service Robotics and Vehicles

- Robot-Assisted Emergency Response

- Sensor Agents

- Human-Robot Interaction

- Intelligent Perception

- Affective Computing

- Task-Driven Control and Semi-Autonomous Control

- 3D Simultaneous Mapping and Localization

- Environment and Health Monitoring

- 3D Sensory Systems

- Multi-Robot Teams

- Person Search in Human-Centered Environments

Assistive Devices

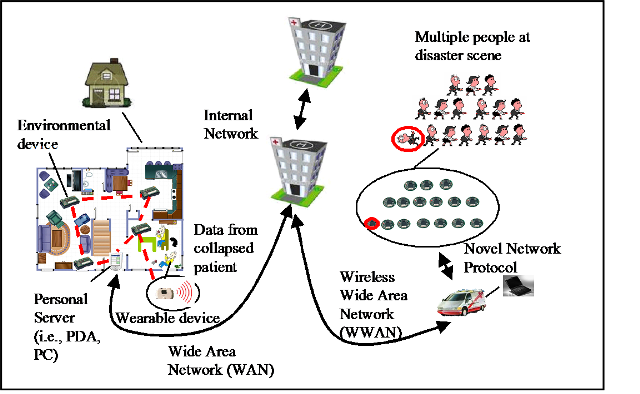

The objective of our research work is to develop multi-modal sensory systems that are integrated into heterogeneous health monitoring devices. Some devices will be worn by the patient and others will be placed in the environment the patient occupies. Together, these devices can provide effective remote health monitoring of a person and also relay important information to that person.

Funding Sources: The Natural Sciences and Engineering Research Council of Canada (NSERC) and The New York State Center of Excellence in Wireless and Information Technology (CEWIT)

Lower-body Exoskeleton Devices

We also focus on the development of control schemes for wearable lower-body exoskeleton devices, for use in post-stroke gait rehabilitation. Persons post-stroke often experience reduced motor control which can affect their overall gait pattern. Improving motor performance and function via robotic exoskeletons is a key goal of our post-stroke rehabilitation research.

To validate gait patterns and control schemes for exoskeleton control, simulation prior to hardware implementation is vital for ensuring patient safety and comfort. Our research has so far focused on developing novel centralized software architectures that support both the simulation and control of exoskeletons, making it easier to prototype, validate, and test gait patterns.

This ultimately allows for a desired gait pattern to be controlled, adjusted, and directly verified by physiotherapists on-the-fly during gait rehabilitation sessions, both in simulation and on a real exoskeleton. We are also currently investigating the use of machine learning techniques for learning patient-specific exoskeleton control schemes.

Video:

Video 2:

https://vimeo.com/showcase/7661752/video/467177802

Published in:

L. Rose, M. C. F. Bazzocchi, C. de Souza, J. Vaughan-Graham, K. Patterson, G. Nejat, "A Framework for Mapping and Controlling Exoskeleton Gait Patterns in Both Simulation and Real-World," Design of Medical Devices Conference, DMD2020-9009, 2020. (PDF)

L. Rose, M. C. F. Bazzocchi, and G. Nejat, “End-to-End Deep Reinforcement Learning for Exoskeleton Control,” IEEE Conference on Systems, Man and Cybernetics, October 14, 2020.

Funding Sources: The Natural Sciences and Engineering Research Council of Canada (NSERC), The University of Toronto EMHSeed Fund, and the Canada Research Chairs Program.

Assistive Robotics

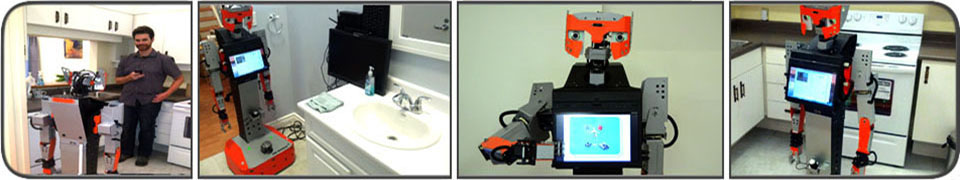

This research focuses on the development and use of innovative robotic technologies to provide person-centered cognitive interventions to improve the quality of life of elderly adults. Namely, the objective of this work is to develop intelligent assistive robots to engage individuals in social human-robot interactions (HRI) in order to maintain or even improve residual social, cognitive and affective functioning.

Specifically, this work involves the design and development of the sensory systems and HRI control architectures for robotic technology to facilitate natural and realistic social interactions during activities of daily living and cognitive exercises for elderly individuals. The control architectures will allow a robot to monitor activities and determine the emotional state and intent of the user. Thus, as an informed intelligent agent, the robot can act as a social motivator by providing appropriate learned behaviours such as cues, words of encouragement, task assistance, and congratulations to facilitate the completion of tasks.

The world's population is aging rapidly, and the growing elderly population is putting a strain on healthcare systems, as older adults use a disproportionately large portion of the available healthcare services. While the demand for healthcare is increasing, large numbers of healthcare workers who are part of this aging population are also retiring. The drastic increase in the number of older adults who need care, combined with the decline in the number of healthcare workers, could diminish the quality of elder care.

This is particularly true for long-term care facilities, where frail older adults who have more complex cognitive and/or physical needs usually live. For these individuals, it is important to provide quality care while also delivering programs that can enhance their independent abilities, increase their social engagement, and provide cognitive stimulation.

Such activities are important for promoting active and healthy living, yet they have been well documented to be lacking in long-term care facilities. Both social and cognitive stimuli have been found to promote the psychological well-being of older adults and minimize the risk of social isolation. Implementing such interventions requires considerable resources and people, and sustaining them on a long-term basis can be very complex and time-consuming for healthcare staff working in long-term care facilities. An alternative approach that could be as effective is to use autonomous assistive robots to implement such interventions.

Videos:

An Intelligent Socially Assistive Robot-Wearable System for Personalized User Dressing Assistance, June, 2023:

Proof of concept video for Tangy learning from demonstration for high-level tasks from non-expert human demonstrations, January 4, 2017:

Tangy facilitating a Bingo game, a demonstration of the robot's capabilities and the target application, June 7, 2015:

Brian 2.1's interactive abilities being shown and discussed by Prof. Nejat, February 14, 2013:

Casper the friendly robot assisting in the kitchen, a demonstration of the robot's capabilities and target application, Sept 4th, 2013:

"Brian 2.0" our socially assistive healthcare robot playing the memory game, March 19, 2010:

"Brian 2.0" our socially assistive healthcare robot displaying body language and facial expressions, August 13, 2010:

Funding Sources: Natural Sciences and Engineering Research Council of Canada (NSERC), the Canada Research Chairs (CRC) Program, AGE-WELL (which is supported by the Government of Canada through the Networks of Centres of Excellence (NCE)), the Connaught Award, Canada Foundation for Innovation (CFI), Canadian Institutes of Health Research (CIHR) and Ontario Research Fund (ORF).

Human-Robot Interaction

Human-robot interaction (HRI) addresses the design, understanding, and evaluation of robotic systems that are used by people or work alongside them. These robots interact through various forms of communication in different real-world environments. HRI encompasses both physical and social interactions with a robot in a broad range of applications, such as physical and cognitive rehabilitation, tele-operation for surgery, surveillance, and others. By understanding these interactions, we can better design robots to suit the functional, ergonomic, aesthetic, and emotional requirements of different users. One user group of particular importance in our research is the elderly population.

Intelligent Perception

The intelligent perception research area concerns the investigation of complex objects and operational processes by means of multi-sensorial data provided by sensors that differ in spatial, temporal, and spectral resolution and in output sensory format. During perception, hypotheses about percepts are formed and tested based on three criteria: sensory data, knowledge, and high-level cognitive processes.

Person Search in Human-Centered Environments

This research focuses on the development of socially assistive robots which can find and assist users who are not initially co-located with the robots. The environments considered include: 1) houses in which a single elderly resident may live alone, as well as 2) large buildings such as long-term care facilities in which multiple elderly users may reside.

Single-User Robot Search in Human-Centered Environments

There is an increasing population of people living with dementia. In the later stages of dementia, these individuals may lose the ability to perform activities of daily living and become more dependent on family members and healthcare providers. Therefore, there is a demand for socially assistive robots to help seniors with activities of daily living at home and improve their quality of life.

These robots are expected to perform tasks such as providing instructions on activities of daily living (e.g., teaching a user how to prepare a meal), giving reminders (for eating a meal, taking medications, going to a doctor's appointment, etc.), and allowing the user to communicate with family members or healthcare providers through telepresence. In these scenarios, the robot often has to find the user first before initiating a social interaction, which motivates the need for a person search strategy. An efficient person search technique allows the robot to find the user in a timely manner, reduces the robot's power consumption, and increases the user's perception of the robot's intelligence.

User Data:

http://asblab.mie.utoronto.ca/sites/default/files/User_pattern_data_0.zip

Published In:

S. Lin, and G. Nejat, “Robot Evidence Based Search for a Dynamic User in an Indoor Environment,” ASME International Design Engineering Technical Conferences & Computers and Information in Engineering Conference, 2018. (pdf)

Funding Sources:

This work was funded by the Natural Sciences and Engineering Research Council of Canada, the Ontario Centres of Excellence, the Canada Research Chairs Program, and CrossWing Inc.

Multi-User Robot Search in Human-Centered Environments

A rapidly aging demographic is putting increased strain on families, long-term care providers, and the healthcare system. This includes retirement homes and long-term care facilities, where staff can feel overworked. To address this problem, we are investigating the use of socially assistive robots for assisting with non-contact menial/repetitive tasks. Example tasks include: 1) reminders, 2) telepresence, and 3) recreational activities (e.g., Bingo and Trivia). However, the residents and the robot are not necessarily co-located within the environment when such tasks need to be initiated. As a result, the robot must be able to search for a single person or a group of multiple people at various times throughout the day.

For our multi-user robot search in human-centered environments, a robot is required to look for multiple users within a specific time frame. The search objective is to have the robot implement a search plan which maximizes the expected number of target persons found. The approach to this problem can be decomposed into 3 sub-problems: 1) model the user behaviors, 2) generate a robot search plan based on user behaviors, and 3) implement and execute the plan on a robot.

Modeling user behaviors requires considering both the spatial and temporal location probabilities of the users. In our research, we relate the users' spatio-temporal location probabilities to the activities that the users perform in the environment. Simulated user data, based on real-world long-term care schedules, have been used to conduct experiments. These data can be found below, under User Activity Data and Maps.
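As a rough illustration of relating location probabilities to activities, the minimal sketch below estimates, for each time slot, the probability that a user is in each region by counting observed activities. It is not the model used in the published works; the activity names, regions, time slots, and the `location_probabilities` helper are all hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical simulated observations for one user: (time_slot, activity).
# Activity names, regions, and time slots are illustrative assumptions.
observations = [
    (9, "breakfast"), (9, "breakfast"), (9, "resting"),
    (10, "bingo"), (10, "resting"), (10, "bingo"), (10, "bingo"),
]

# Assumed mapping from each activity to the region where it takes place.
activity_region = {
    "breakfast": "dining_room",
    "bingo": "games_room",
    "resting": "bedroom",
}


def location_probabilities(observations, activity_region):
    """Return {time_slot: {region: probability}} from activity frequency counts."""
    counts = defaultdict(Counter)
    for slot, activity in observations:
        counts[slot][activity_region[activity]] += 1

    model = {}
    for slot, region_counts in counts.items():
        total = sum(region_counts.values())
        model[slot] = {region: n / total for region, n in region_counts.items()}
    return model


model = location_probabilities(observations, activity_region)
```

Each time slot yields a distribution over regions that sums to one, which a search planner can then query for the time at which the search takes place.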

Generating robot search plans based on user spatio-temporal models requires the planner to consider the current location of the robot as well as all previously searched locations. For many planning approaches, generating a plan quickly becomes computationally expensive and cannot be performed in real time. To generate plans in real time, the optimal solution must therefore often be approximated, which we do by decomposing the problem into sub-problems. This is discussed in more detail in our published works, which can be found below under Published In.
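To make the idea of an approximate plan concrete, here is a minimal sketch of a greedy heuristic, which is a stand-in for illustration and not the decomposition described in the published works. It assumes each user stays in one unknown region during the search window, and it repeatedly visits the unsearched region with the highest expected number of users found per unit of travel time. The region names, travel times, probabilities, and the `greedy_search_plan` function are hypothetical.

```python
def greedy_search_plan(start, regions, travel_time, user_probs, time_budget):
    """Greedily pick regions by expected users found per unit of travel time.

    user_probs[u][r] is the assumed probability that user u is in region r.
    travel_time[(a, b)] is the (positive) time to move from a to b.
    Returns (plan, expected number of users found).
    """
    plan, expected_found = [], 0.0
    current, remaining = start, time_budget
    unsearched = set(regions)  # tracks which locations were already searched

    while unsearched:
        best, best_score = None, 0.0
        for region in sorted(unsearched):  # sorted for deterministic ties
            cost = travel_time[(current, region)]
            if cost > remaining:
                continue  # cannot reach this region within the time budget
            gain = sum(p.get(region, 0.0) for p in user_probs.values())
            if gain / cost > best_score:
                best, best_score = region, gain / cost
        if best is None:
            break  # nothing reachable or nothing left worth searching
        remaining -= travel_time[(current, best)]
        expected_found += sum(p.get(best, 0.0) for p in user_probs.values())
        plan.append(best)
        unsearched.remove(best)
        current = best
    return plan, expected_found


# Illustrative data only.
one_way = {
    ("hall", "bedroom"): 2, ("hall", "dining_room"): 1, ("hall", "games_room"): 3,
    ("bedroom", "dining_room"): 2, ("bedroom", "games_room"): 2,
    ("dining_room", "games_room"): 1,
}
travel_time = {**one_way, **{(b, a): t for (a, b), t in one_way.items()}}
user_probs = {
    "alice": {"dining_room": 0.6, "bedroom": 0.4},
    "bob": {"games_room": 0.7, "bedroom": 0.3},
}

plan, expected = greedy_search_plan(
    "hall", ["bedroom", "dining_room", "games_room"], travel_time, user_probs, 3
)
```

A greedy choice like this runs quickly but gives no optimality guarantee, which is the usual trade-off when an exact plan is too expensive to compute in real time.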

Implementation and execution require the robot to navigate efficiently and safely within the human-centered environment, and to use vision processing techniques to identify people in it. Because people will be facing various directions relative to the robot as it moves, the robot must be capable of detecting a person from any direction, determining which way the person is facing, and then moving in front of the person to determine their identity from their face.
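The last step, moving in front of a detected person, can be sketched with simple planar geometry. The example below assumes the person's position and facing direction have already been estimated in a 2D map frame; the `approach_pose` function and the 1 m default standoff distance are hypothetical choices for illustration, not values from the published work.

```python
import math


def approach_pose(person_x, person_y, person_heading, standoff=1.0):
    """Return (x, y, yaw) for a robot standing in front of a person, facing them.

    person_heading is the direction the person faces, in radians.
    standoff is the assumed distance (in metres) to keep from the person.
    """
    # Step out from the person along the direction they are facing.
    goal_x = person_x + standoff * math.cos(person_heading)
    goal_y = person_y + standoff * math.sin(person_heading)
    # The robot looks back at the person: opposite heading, wrapped to [-pi, pi).
    yaw = (person_heading + 2 * math.pi) % (2 * math.pi) - math.pi
    return goal_x, goal_y, yaw


# A person at (2, 3) facing the +y direction.
goal_x, goal_y, yaw = approach_pose(2.0, 3.0, math.pi / 2)
```

In practice the resulting pose would be passed to the robot's navigation stack as a goal, after checking that the location is free of obstacles.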

The overall search problem and its 3 sub-problems are discussed in detail in our published works which can be found below, under Published In.

User Activity Data and Maps:

http://asblab.mie.utoronto.ca/sites/default/files/User_Activity_Data_and...

Published In:

1. S. Mohamed and G. Nejat, “Autonomous Search by a Socially Assistive Robot in a Residential Care Environment for Multiple Elderly Users Using Group Activity Preferences,” International Conference on Automated Planning and Scheduling (ICAPS) Workshop on Planning and Robotics, 2016. (DOI)

2. K. E. C. Booth, S. C. Mohamed, S. Rajaratnam, G. Nejat, and J. C. Beck, “Robots in Retirement Homes: Person Search and Task Planning for a Group of Residents by a Team of Assistive Robots,” IEEE Intelligent Systems, vol. 32, iss. 6, pp. 14-21, 2017. (pdf)

3. Person Finding: An Autonomous Robot Search Method for Finding Multiple Dynamic Users in Human-Centered Environments. (under review)

Funding Sources:

This work was supported by the Natural Sciences and Engineering Research Council of Canada (NSERC), Dr. Robot Inc., the Ontario Centres of Excellence (OCE), the Canadian Consortium on Neurodegeneration in Aging (CCNA), and the Canada Research Chairs program (CRC).

Robot-Assisted Emergency Response

The objective of our research is to develop a team of collaborative mobile rescue robots that can be deployed in the field. We study human-robot cooperation between teams of rescue workers/volunteers and the robots, as well as multi-robot coordination between different robotic team members, in order to optimize robot design to meet the needs of rescue workers/volunteers in time-critical rescue missions. In a time-critical search and rescue (SAR) context, it is almost impossible for a single rescue robot to address all the challenges posed by the environment. As a result, teamwork, including human-robot cooperation and multi-robot coordination, becomes essential for rescue robots.

Sensor Agents

The objective of this research work is to develop sensor agents for surveillance and monitoring applications in unknown or potentially dangerous environments.

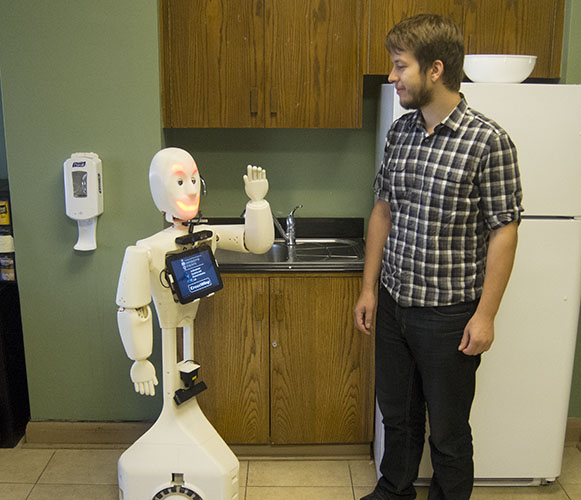

Social and Personal Robots

Our research in this area focuses on the development of human-like social robots with the social functionalities and behavioral norms required to engage humans in natural assistive interactions such as providing: 1) reminders, 2) health monitoring, and 3) social and cognitive training interventions. In order for these robots to successfully partake in social HRI, they must be able to interpret human social cues. This can be achieved by perceiving and interpreting the natural communication modes of a human, such as speech, paralanguage (intonation, pitch, and volume of voice), body language and facial expressions.

Interactive robots developed for social human-robot interaction (HRI) scenarios need to be socially intelligent in order to engage in natural bi-directional communication with humans. Social intelligence allows a robot to share information with, relate to, and interact with people in human-centered environments. Robot social intelligence can result in more effective and engaging interactions and hence, better acceptance of a robot by the intended users.

Brian 2.1 during a one-on-one interaction.

We have been developing automated real-time affect (emotion, mood and attitude) detection and classification systems to detect and classify natural modes of human communication, including:

1) body language,

2) paralanguage, and

3) facial expressions

for our social robots to interpret in order to effectively engage a person in a desired activity by displaying appropriate emotion-based behaviours.

Videos:

Example Brian 2.1 behaviours during accessibility-aware Robot Tutor and Robot Restaurant Finder interactions:

Shane Saunderson presenting on the importance of considering communication directness and robot familiarity in HRI:

Funding Sources: Natural Sciences and Engineering Research Council of Canada (NSERC), the Canada Research Chairs (CRC) Program, AGE-WELL (which is supported by the Government of Canada through the Networks of Centres of Excellence (NCE)), Canada Foundation for Innovation (CFI) and Ontario Research Fund (ORF).

Swarm Robotics and mROBerTO

Swarm robotics is an area of research within multi-robot systems in which physical robots exhibit intelligence and collective behaviors through direct local interactions between the robots. In this context, we designed and implemented a novel small (16 × 16 mm²) modular millirobot, mROBerTO (milli-ROBot-TOronto), in order to experiment with collective-behaviour algorithms. These robots have potential use in a wide range of applications, such as environment monitoring and surveillance, micro-assembly, medicine, and search and rescue.

The primary design objectives for our robot were maximum (i) modularity, (ii) use of off-the-shelf components, and (iii) processing and sensing, as well as minimum footprint. mROBerTO comprises four modules: mainboard, locomotion, primary sensing, and secondary sensing.

Our modular design allows changes and upgrades to individual modules with little or no disruption to other modules. Using mostly off-the-shelf components allows easy assembly, production, and maintenance. A small footprint allows more robots to operate in smaller workspaces. Improved processing and sensing capabilities are essential for implementing and testing complex behaviors and tasks.

Below is a brief video of mROBerTO’s workings:

Published in: Justin Yonghui Kim, Tyler Colaco, Zendai Kashino, Goldie Nejat, and Beno Benhabib. “mROBerTO: A Modular Millirobot for Swarm-Behavior Studies,” 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 2109-2114.

Funding Source: This research is funded by the Natural Sciences and Engineering Research Council of Canada (NSERC).